
Calibration & Planning/Calibration

parent

95417c2c

- docs/source/calibration/extrinsic_calibration.md: 106 additions, 1 deletion

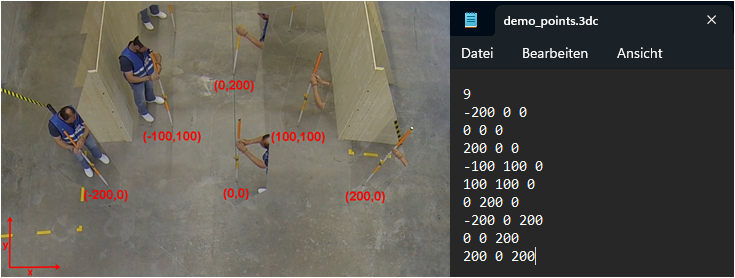

- docs/source/calibration/images/demo_points.3dc: 10 additions, 0 deletions

- docs/source/calibration/images/extrinsic_all_enlarged.png: 0 additions, 0 deletions

- docs/source/calibration/images/extrinsic_grid_and_points.png: 0 additions, 0 deletions

- docs/source/calibration/images/extrinsic_select_points.png: 0 additions, 0 deletions

- docs/source/calibration/images/intrinsic_calibration_section.png: 0 additions, 0 deletions

- docs/source/calibration/images/photothumb.db: 0 additions, 0 deletions

- docs/source/calibration/images/points.png: 0 additions, 0 deletions

- docs/source/calibration/images/pre_post_intrinsic.jpg: 0 additions, 0 deletions

- docs/source/calibration/intrinsic_calibration.md: 56 additions, 2 deletions

- docs/source/planning/calibration.md: 114 additions, 0 deletions

- docs/source/planning/camera.md: 106 additions, 44 deletions

- docs/source/planning/images/calibration_examples.jpg: 0 additions, 0 deletions

- docs/source/planning/images/chessboard.jpg: 0 additions, 0 deletions

- docs/source/planning/images/chessboard_border.jpg: 0 additions, 0 deletions

- docs/source/planning/images/chessboard_coverage.jpg: 0 additions, 0 deletions

- docs/source/planning/images/coordinate_grid.png: 0 additions, 0 deletions

- docs/source/planning/images/ranging_pole_level.png: 0 additions, 0 deletions

- docs/source/planning/planning.md: 1 addition, 0 deletions

docs/source/calibration/images/photothumb.db

0 → 100644

File added

docs/source/calibration/images/points.png

0 → 100644

6.26 KiB

docs/source/planning/calibration.md

0 → 100644

docs/source/planning/images/chessboard.jpg

0 → 100644

106 KiB
